主要内容:

- 花分类数据集下载

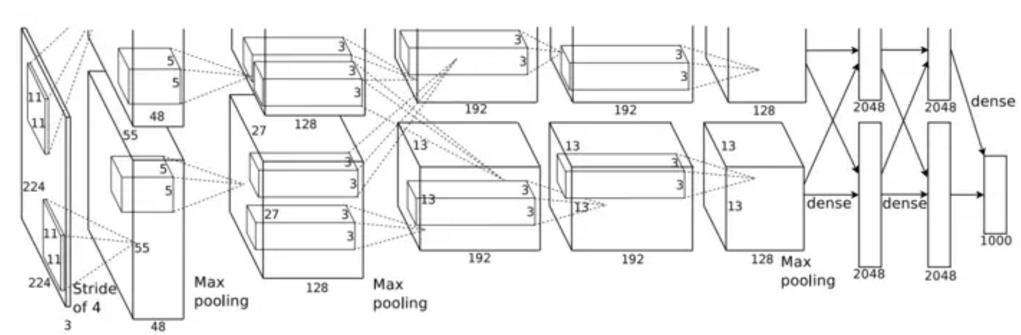

- AlexNet网络基本结构及具体实现

- 用AlexNet网络对花分类数据集进行训练

- 我啥时候能学到ResNet呀。。。

AlexNet

网络亮点:

- 首次使用GPU进行网络加速训练

- 使用ReLU激活函数

- 使用LRN局部响应归一化

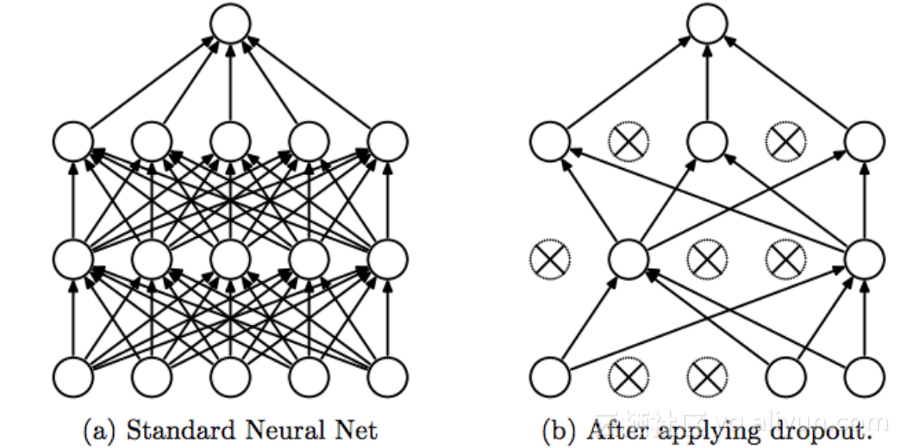

- 全连接层的前两层使用Dropout随及失活神经元操作,以减少过拟合

Dropout:在网络正向传播过程中随机失活一部分神经元(减少网络训练参数,解决过拟合问题)
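To make the Dropout description concrete, here is a minimal sketch in plain Python of "inverted dropout", the variant that PyTorch's `nn.Dropout` uses: during training each activation is zeroed with probability `p` and the survivors are scaled by `1 / (1 - p)`, while at inference the input passes through unchanged. The function name `dropout` and its arguments are illustrative and not taken from the original post.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout on a flat list of activations (illustrative helper)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        # At inference time nothing is dropped.
        return list(activations)
    keep_scale = 1.0 / (1.0 - p)
    # Zero each value with probability p; scale the survivors so the
    # expected value of every activation stays the same.
    return [0.0 if rng.random() < p else a * keep_scale for a in activations]


x = [1.0] * 8
print(dropout(x, p=0.5))                  # a random mix of 0.0 and 2.0
print(dropout(x, p=0.5, training=False))  # the input, unchanged
```

Because a different random subset is dropped on every call, no single neuron can be relied on, which is the intuition behind the reduced overfitting.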

Downloading the flower classification dataset

Dataset download URL: http://download.tensorflow.org/example_images/flower_photos.tgz

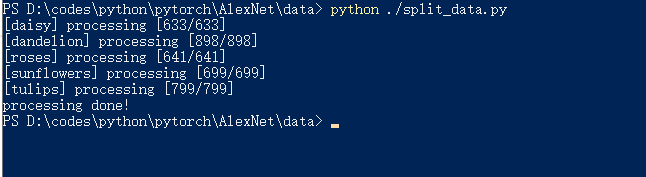
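If you would rather script the download than use the browser, the sketch below shows one possible way with only the standard library. The helper names `extract_archive` and `download_and_extract` are made up for this example, and the destination folder assumes you run it from the project root so that the files end up under `./data/flower_data/`.

```python
import os
import tarfile
import urllib.request

DATA_URL = "http://download.tensorflow.org/example_images/flower_photos.tgz"


def extract_archive(archive_path, dest_dir):
    """Unpack a .tgz archive into dest_dir, creating the folder if needed."""
    os.makedirs(dest_dir, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        tar.extractall(dest_dir)


def download_and_extract(url=DATA_URL, dest_dir=os.path.join("data", "flower_data")):
    """Download the archive (skipped if already present) and unpack it."""
    os.makedirs(dest_dir, exist_ok=True)
    archive_path = os.path.join(dest_dir, os.path.basename(url))
    if not os.path.exists(archive_path):
        urllib.request.urlretrieve(url, archive_path)
    extract_archive(archive_path, dest_dir)


# Uncomment to actually fetch the dataset (this is a large download):
# download_and_extract()
```

After extraction the images sit in `data/flower_data/flower_photos/<class_name>/`, which is the layout the split script expects.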

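Extract the downloaded archive into ./data/flower_data/. Then, in the ./data directory, hold Shift and right-click, open PowerShell, and run split_data.py to split the images into a training set and a validation set. The code is as follows: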

```python
import os
import random
from shutil import copy


def mkfile(path):
    # Create the directory (and any missing parents) if it does not exist yet.
    if not os.path.exists(path):
        os.makedirs(path)


# Source folder: one sub-directory per flower class.
file = 'flower_data/flower_photos'
# Skip .txt entries (such as the license file); the rest are class folders.
flower_class = [cla for cla in os.listdir(file) if ".txt" not in cla]

# Create train/<class> and val/<class> output directories.
mkfile('flower_data/train')
for cla in flower_class:
    mkfile('flower_data/train/' + cla)

mkfile('flower_data/val')
for cla in flower_class:
    mkfile('flower_data/val/' + cla)

# Fraction of each class that goes to the validation set.
split_rate = 0.1

for cla in flower_class:
    cla_path = file + '/' + cla + '/'
    images = os.listdir(cla_path)
    num = len(images)
    # Randomly pick the validation images for this class.
    eval_index = random.sample(images, k=int(num * split_rate))
    for index, image in enumerate(images):
        image_path = cla_path + image
        if image in eval_index:
            new_path = 'flower_data/val/' + cla
        else:
            new_path = 'flower_data/train/' + cla
        copy(image_path, new_path)
        print("\r[{}] processing [{}/{}]".format(cla, index + 1, num), end="")  # progress bar
    print()

print("processing done!")
```
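Once the split has finished, a quick sanity check is to count the files in each class folder and confirm that roughly 10% landed in `val`. The sketch below is one way to do that; `count_images` and `report_split` are illustrative helpers that are not part of the original script, and they assume the `flower_data/train` and `flower_data/val` layout created above.

```python
import os


def count_images(root):
    """Return {class_name: number_of_files} for each sub-directory of root."""
    counts = {}
    for cla in sorted(os.listdir(root)):
        cla_dir = os.path.join(root, cla)
        if os.path.isdir(cla_dir):
            counts[cla] = len(os.listdir(cla_dir))
    return counts


def report_split(data_root="flower_data"):
    """Print per-class train/val counts and the validation share."""
    train = count_images(os.path.join(data_root, "train"))
    val = count_images(os.path.join(data_root, "val"))
    for cla in train:
        n_train, n_val = train[cla], val.get(cla, 0)
        total = n_train + n_val
        share = n_val / total if total else 0.0
        print("{:<12} train={:<5} val={:<5} val share={:.1%}".format(
            cla, n_train, n_val, share))


# Only report when the split folders actually exist in the working directory.
if os.path.isdir(os.path.join("flower_data", "train")):
    report_split()
```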

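When split_data.py runs, it prints a progress line of the form `[class] processing [i/n]` for each class and finishes with `processing done!`.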

Building the AlexNet network

Network parameters

| layer_name | kernel_size | kernel_num | padding | stride |
|---|---|---|---|---|
| Conv1 | 11 | 96 | [1,2] | 4 |
| Maxpool1 | 3 | None | 0 | 2 |
| Conv2 | 5 | 256 | [2,2] | 1 |
| Maxpool2 | 3 | None | 0 | 2 |
| Conv3 | 3 | 384 | [1,1] | 1 |
| Conv4 | 3 | 384 | [1,1] | 1 |
| Conv5 | 3 | 256 | [1,1] | 1 |
| Maxpool3 | 3 | None | 0 | 2 |
| FC1 | 2048 | None | None | None |
| FC2 | 2048 | None | None | None |
| FC3 | 1000 | None | None | None |

For the FC rows, the kernel_size column gives the number of output units; FC3 outputs one value per class (1000 by default in the code).
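The table can be checked with the usual output-size formula for convolution and pooling layers, `out = floor((W - F + P_total) / S) + 1`, where `W` is the input size, `F` the kernel size, `S` the stride and `P_total` the padding summed over both sides. The sketch below traces the feature-map size through the layers, assuming a 224×224 input (an assumption; the table does not state the input size) and the symmetric padding used in the model code of this post. The names `out_size`, `LAYERS` and `trace` are illustrative.

```python
def out_size(size, kernel, stride, pad_total):
    """Output width/height of a conv or pooling layer (floor mode)."""
    return (size - kernel + pad_total) // stride + 1


# (name, kernel_size, stride, total padding = left + right)
LAYERS = [
    ("Conv1", 11, 4, 4),    # padding=2 on each side
    ("Maxpool1", 3, 2, 0),
    ("Conv2", 5, 1, 4),
    ("Maxpool2", 3, 2, 0),
    ("Conv3", 3, 1, 2),
    ("Conv4", 3, 1, 2),
    ("Conv5", 3, 1, 2),
    ("Maxpool3", 3, 2, 0),
]


def trace(size=224):
    """Return [(layer_name, output_size), ...] for a square input."""
    sizes = []
    for name, kernel, stride, pad_total in LAYERS:
        size = out_size(size, kernel, stride, pad_total)
        sizes.append((name, size))
    return sizes


for name, size in trace():
    print("{:<9} -> {} x {}".format(name, size, size))
```

The final feature map is 6×6, which is where the `128 * 6 * 6` input size of the first fully connected layer comes from. Conv1 gives 55 either way: the table's asymmetric `[1,2]` padding (3 in total) divides evenly, and with 2 on each side the extra fraction is simply floored away.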

model.py

Note: the number of convolution kernels used here is half that of the original network.

```python
import torch
import torch.nn as nn


class AlexNet(nn.Module):
    def __init__(self, num_classes=1000, init_weights=False):
        super(AlexNet, self).__init__()
        # Convolutional feature extractor (half the kernels of the original AlexNet).
        # Conv2d arguments: in_channels, out_channels, kernel_size, stride, padding.
        # MaxPool2d arguments: kernel_size, stride.
        self.features = nn.Sequential(
            nn.Conv2d(3, 48, 11, 4, 2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2),
            nn.Conv2d(48, 128, 5, 1, 2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2),
            nn.Conv2d(128, 192, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 192, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 128, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2),
        )
        # Classifier: Dropout precedes the first two fully connected layers.
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(128 * 6 * 6, 2048),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(2048, 2048),
            nn.ReLU(inplace=True),
            nn.Linear(2048, num_classes),
        )
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        x = self.features(x)
        # Tensor layout is [batch, channel, height, width]; flatten from dim 1
        # (channel) onward so the batch dimension is kept.
        x = torch.flatten(x, start_dim=1)
        x = self.classifier(x)
        return x

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)
```
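For reference, `kaiming_normal_` with `mode='fan_out'` and `nonlinearity='relu'` draws weights from a zero-mean normal distribution with standard deviation `sqrt(2 / fan_out)`, where `fan_out = out_channels * kernel_height * kernel_width` for a convolution. The sketch below only evaluates that formula in plain Python for the five conv layers of the halved network; the helper name `kaiming_std_fan_out` is illustrative.

```python
import math


def kaiming_std_fan_out(out_channels, kernel_size):
    """Std of Kaiming-normal init (fan_out mode, ReLU gain) for a square kernel."""
    fan_out = out_channels * kernel_size * kernel_size
    return math.sqrt(2.0 / fan_out)


# (name, out_channels, kernel_size) for the conv layers in model.py
CONV_LAYERS = [
    ("Conv1", 48, 11),
    ("Conv2", 128, 5),
    ("Conv3", 192, 3),
    ("Conv4", 192, 3),
    ("Conv5", 128, 3),
]

for name, out_channels, kernel_size in CONV_LAYERS:
    std = kaiming_std_fan_out(out_channels, kernel_size)
    print("{}: std = {:.4f}".format(name, std))
```

The linear layers, by contrast, use a fixed standard deviation of 0.01 in `_initialize_weights`.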

train.py

```python
import torch
```

predict.py

```python
import torch
```